Hallucination

/həˌluːsɪˈneɪʃən/

What is an AI Hallucination?

An AI hallucination occurs when a large language model (LLM) produces output that looks plausible but is factually wrong or unsupported by evidence. Unlike a conventional software bug, where a system might crash or return an error, a hallucinating LLM delivers fabricated or incorrect answers with the same confident tone as correct ones.

Hallucinations can be categorized as:

- Factuality errors: The model states incorrect facts

- Faithfulness errors: The model distorts or misrepresents the source or prompt
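Some faithfulness errors can be caught mechanically by comparing the output with its source. The sketch below is a deliberately crude heuristic, assumed here purely for illustration: it flags any number in a model's summary that never appears in the source text. The function name `unsupported_numbers` is hypothetical. Real faithfulness checks need semantic comparison rather than string matching, and this one cannot catch errors that involve no numbers at all.

```python
import re

# Matches integers and decimals such as "42", "3.5", or "1,200".
NUMBER_PATTERN = re.compile(r"\d+(?:[.,]\d+)*")


def unsupported_numbers(source: str, summary: str) -> list[str]:
    """Return numbers that appear in the summary but nowhere in the source.

    A number the model introduces that the source never mentions is a
    candidate faithfulness error (a distortion of the source).
    """
    source_numbers = set(NUMBER_PATTERN.findall(source))
    return [n for n in NUMBER_PATTERN.findall(summary) if n not in source_numbers]


source = "The bridge opened in 1932 and is 503 metres long."
summary = "The 503-metre bridge opened in 1936."
print(unsupported_numbers(source, summary))  # ['1936']
```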

Why Do LLMs Hallucinate?

The causes are both technical and systemic:

Technical factors:

- Noisy or incomplete training data

- The probabilistic nature of next-token prediction

- Lack of grounding in external knowledge

- Architectural limitations in memory and reasoning
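The point about next-token prediction can be made concrete. At each step the model converts scores (logits) over candidate tokens into a probability distribution and samples from it, so a plausible but wrong continuation keeps a nonzero chance of being chosen even when the correct one is favored. The logits below are invented for illustration, and a real model scores its entire vocabulary rather than three tokens.

```python
import math
import random


def softmax(logits: dict[str, float], temperature: float = 1.0) -> dict[str, float]:
    """Convert raw scores into probabilities; a higher temperature flattens them."""
    scaled = {tok: score / temperature for tok, score in logits.items()}
    max_score = max(scaled.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(s - max_score) for tok, s in scaled.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}


# Hypothetical logits for the next token after "The capital of Australia is"
logits = {"Canberra": 3.0, "Sydney": 2.2, "Melbourne": 1.0}

for temperature in (0.5, 1.0, 1.5):
    probs = softmax(logits, temperature)
    p_wrong = 1.0 - probs["Canberra"]
    print(f"T={temperature}: P(wrong answer) = {p_wrong:.2f}")

# Sampling draws from the distribution, so wrong answers still appear sometimes.
rng = random.Random(0)
probs = softmax(logits)
samples = rng.choices(list(probs), weights=list(probs.values()), k=1000)
print({tok: samples.count(tok) for tok in probs})
```

The correct answer is the single most likely token at every temperature, yet the chance of sampling a wrong one never reaches zero and grows as the temperature rises.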

Systemic incentives: OpenAI's 2025 paper "Why Language Models Hallucinate" argues that next-token prediction, combined with benchmarks that give an "I don't know" response no more credit than a wrong answer, implicitly pushes models to bluff rather than abstain. In effect, standard training and evaluation reward confident guessing over admitting uncertainty.
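The incentive argument reduces to expected-value arithmetic. Suppose a model believes its answer is correct with probability p. Under binary grading (1 point if right, 0 if wrong, 0 for "I don't know"), answering earns p in expectation and abstaining earns 0, so guessing always wins. The sketch below assumes an illustrative alternative in which a wrong answer costs t / (1 - t) points for some confidence threshold t. That penalty makes p = t the break-even point, so answering only pays off when p > t. Treat it as one example of a scoring rule of this kind, not as the exact rule any particular benchmark uses.

```python
def expected_score(p_correct: float, wrong_penalty: float) -> float:
    """Expected score for answering: +1 if right, -wrong_penalty if wrong.

    Answering "I don't know" is assumed to score exactly 0.
    """
    return p_correct - (1.0 - p_correct) * wrong_penalty


def penalty_for_threshold(t: float) -> float:
    """Penalty t / (1 - t): with it, answering beats abstaining only when p > t."""
    return t / (1.0 - t)


def should_answer(p_correct: float, wrong_penalty: float) -> bool:
    """Answer only if the expected score beats the 0 earned by abstaining."""
    return expected_score(p_correct, wrong_penalty) > 0.0


p = 0.3  # the model is only 30% sure of its answer

# Binary grading: wrong answers cost nothing, so even a long shot is worth guessing.
print(should_answer(p, wrong_penalty=0.0))  # True

# Threshold grading at t = 0.75: a wrong answer costs 3 points, so abstaining is better.
print(should_answer(p, wrong_penalty=penalty_for_threshold(0.75)))  # False
```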

Hallucination Rates in 2025

The field has made significant progress, though reported rates vary widely with the benchmark and task used to measure them:

- Top-tier models (Gemini-2.0-Flash, o3-mini-high): 0.7% to 0.9% hallucination rates

- Medium tier: 2% to 5%

- Average across all models: ~9.2% for general knowledge

Four models now achieve sub-1% rates—a significant milestone for enterprise trustworthiness.
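A hallucination rate is the fraction of evaluated responses judged to contain a hallucination, so figures like these depend on which prompts are tested and on who or what does the judging. A minimal sketch, assuming each response already carries a verdict. The `JudgedResponse` type is hypothetical and the labels are made up.

```python
from dataclasses import dataclass


@dataclass
class JudgedResponse:
    prompt_id: str
    hallucinated: bool  # verdict from a human or automated judge (assumed given)


def hallucination_rate(responses: list[JudgedResponse]) -> float:
    """Fraction of responses judged to contain a hallucination."""
    if not responses:
        raise ValueError("need at least one judged response")
    return sum(r.hallucinated for r in responses) / len(responses)


# Hypothetical evaluation: 1,000 responses, 8 of them judged hallucinated.
judged = [JudgedResponse(f"q{i}", hallucinated=(i < 8)) for i in range(1000)]
rate = hallucination_rate(judged)
print(f"{rate:.1%}")  # 0.8%
print(rate < 0.01)    # True: this model would count as "sub-1%"
```

On a 1,000-prompt test set, the gap between 0.7% and 0.9% amounts to just two responses.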

Mitigation Strategies

Retrieval-Augmented Generation (RAG): Widely regarded as one of the most effective technical mitigations. Instead of relying solely on what the model memorized during training, the system retrieves relevant documents from trusted sources and supplies them to the LLM as context for its answer.
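The retrieve-then-generate flow can be sketched in a few lines. This toy assumes an in-memory document list and uses naive word-overlap scoring where a production system would typically use embedding search. The model call is left out, and the function names are hypothetical.

```python
import re

DOCUMENTS = [
    "Canberra is the capital city of Australia.",
    "Sydney is the most populous city in Australia.",
    "The Great Barrier Reef lies off the coast of Queensland.",
]


def tokenize(text: str) -> set[str]:
    """Lowercased set of words, ignoring punctuation."""
    return set(re.findall(r"[a-z]+", text.lower()))


def retrieve(question: str, documents: list[str], k: int = 2) -> list[str]:
    """Return the k documents that share the most words with the question."""
    q_words = tokenize(question)
    ranked = sorted(documents, key=lambda d: len(q_words & tokenize(d)), reverse=True)
    return ranked[:k]


def build_grounded_prompt(question: str, passages: list[str]) -> str:
    """Place the retrieved passages before the question so the model answers from them."""
    context = "\n".join(f"[{i + 1}] {p}" for i, p in enumerate(passages))
    return (
        "Answer the question using only the numbered context passages.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )


question = "What is the capital of Australia?"
passages = retrieve(question, DOCUMENTS)
print(build_grounded_prompt(question, passages))
# The prompt would then be sent to the LLM (not shown).
```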

Prompt Engineering: Being specific, providing context, instructing "think step by step," and explicitly allowing "I don't know" responses.
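These prompt-engineering habits can be folded into a reusable template. A minimal sketch: the instruction wording and the `IDK_TOKEN` sentinel are assumptions. Asking for a fixed sentinel makes abstentions easy to detect in code, but it does not guarantee that the model will use it.

```python
IDK_TOKEN = "I don't know"  # hypothetical sentinel the model is asked to use


def build_prompt(question: str, context: str) -> str:
    """Assemble a prompt that is specific, supplies context, asks for
    step-by-step reasoning, and explicitly permits abstaining."""
    return "\n".join([
        "You are answering a factual question about the material below.",
        f"Context:\n{context}",
        f"Question: {question}",
        "Think step by step, then give your final answer on the last line.",
        f"If you are not sure, reply exactly: {IDK_TOKEN}",
    ])


def is_abstention(reply: str) -> bool:
    """Treat a reply whose last non-empty line is the sentinel as an abstention."""
    lines = [line.strip() for line in reply.strip().splitlines() if line.strip()]
    return bool(lines) and lines[-1].rstrip(".") == IDK_TOKEN


prompt = build_prompt("When was the bridge opened?", "The bridge opened in 1932.")
print(prompt)
print(is_abstention("The context does not say.\nI don't know."))  # True
print(is_abstention("It opened in 1932."))                        # False
```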

Calibration-aware training: Newer approaches reward models for expressing uncertainty or abstaining, rather than for guessing confidently.
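A proper scoring rule such as the Brier score shows why rewarding calibration discourages bluffing. It is used here as an illustrative stand-in, not as a description of any specific training method. The model reports a confidence p that its answer is correct and receives −(p − y)², where y is 1 if the answer was right and 0 otherwise. A confidently wrong answer is penalized far more than a hedged one, and reporting the model's true accuracy maximizes the expected reward.

```python
def brier_reward(confidence: float, correct: bool) -> float:
    """Negative squared error between stated confidence and the actual outcome."""
    outcome = 1.0 if correct else 0.0
    return -(confidence - outcome) ** 2


def expected_reward(reported: float, true_accuracy: float) -> float:
    """Average reward when the answer is right with probability true_accuracy."""
    return (true_accuracy * brier_reward(reported, True)
            + (1 - true_accuracy) * brier_reward(reported, False))


# A wrong answer: full confidence costs 1.0, a hedged 0.5 costs only 0.25.
print(brier_reward(1.0, correct=False), brier_reward(0.5, correct=False))

# If the model is right 60% of the time, reporting 0.6 beats claiming certainty.
print(expected_reward(0.6, 0.6) > expected_reward(1.0, 0.6))  # True
```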

Human-in-the-loop: Critical decisions require human verification.
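Human-in-the-loop review is often implemented as a routing rule. The sketch below assumes that the system has a confidence estimate for each answer and a flag marking high-stakes requests. The `ModelAnswer` fields and the 0.9 threshold are hypothetical and would need tuning for a real deployment.

```python
from dataclasses import dataclass


@dataclass
class ModelAnswer:
    text: str
    confidence: float   # assumed to come from some calibrated estimate
    high_stakes: bool   # e.g. medical, legal, or financial decisions


CONFIDENCE_THRESHOLD = 0.9  # hypothetical cut-off


def route(answer: ModelAnswer) -> str:
    """Send high-stakes or low-confidence answers to a human reviewer."""
    if answer.high_stakes or answer.confidence < CONFIDENCE_THRESHOLD:
        return "human_review"
    return "auto_release"


print(route(ModelAnswer("Store hours are 9 to 5.", 0.97, high_stakes=False)))  # auto_release
print(route(ModelAnswer("Dosage: 20 mg daily.", 0.97, high_stakes=True)))      # human_review
print(route(ModelAnswer("Refund approved.", 0.60, high_stakes=False)))         # human_review
```

High-stakes answers always go to a person, regardless of confidence, because a confident hallucination reads the same as a correct answer.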

The Naming Debate

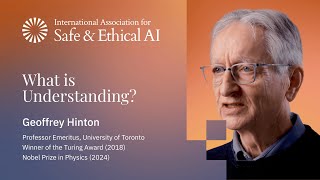

Geoffrey Hinton argues these errors should be called "confabulations" rather than hallucinations. Hallucination implies pathology, whereas confabulation is normal human behavior: we construct memories rather than retrieve files. The distinction matters because confabulation frames the behavior as an inherent feature of generative systems rather than a bug to be eliminated.

"We don't store files and retrieve them; we construct memories when we need them." — Geoffrey Hinton

The Shift in Thinking

The field has moved from "chasing zero hallucinations" to "managing uncertainty in a measurable, predictable way." Perfect accuracy may be impossible, but acceptable enterprise-level reliability is achievable.

Related Reading

- Confabulation - Hinton's preferred term

- Grounding - Connecting outputs to verified sources

- Geoffrey Hinton - Pioneer advocating for the confabulation framing