Confabulation

kon-fab-yoo-LAY-shun

Definition

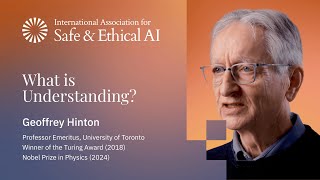

Confabulation is Geoffrey Hinton's preferred term for what are usually called AI "hallucinations" - cases where a model generates plausible-sounding but incorrect information. Hinton argues the term is more accurate because humans do the same thing.

Why Confabulation, Not Hallucination?

"Hallucination" implies perceiving things that aren't there - a pathological condition. "Confabulation" is a psychological term for producing false or distorted memories without any intent to deceive, and in its everyday forms it is normal human behavior.

"Hallucinations should be called confabulations - we do them too." — Geoffrey Hinton

The John Dean Example

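Hinton uses John Dean's Watergate testimony as evidence that humans confabulate:

John Dean testified under oath before the Senate Watergate Committee about his meetings with Nixon. He was trying to tell the truth, but when his testimony was later checked against Nixon's secret White House tape recordings, he turned out to be "wrong about huge numbers of details" - meetings that never happened, misattributed quotes. Yet "the gist of what he said was exactly right."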

How Memory Actually Works

Neither humans nor LLMs store information like files:

"We don't store files and retrieve them; we construct memories when we need them, influenced by everything we've learned since."

Because each memory is rebuilt at the moment it is recalled, the process can introduce errors. Hinton sees this as the same mechanism behind both human false memories and AI confabulations.
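As a loose analogy only (not a model of how brains or LLMs are actually built), the Python sketch below contrasts file-style storage with reconstructive recall. Everything in it is hypothetical: the `EPISODES` data and the `file_recall`, `learn`, and `reconstruct` helpers are invented for illustration. The reconstructive version keeps only learned frequency patterns, not the episodes themselves, so it rebuilds a memory from a cue and fills in each detail with whatever is most typical.

```python
from collections import Counter

# Hypothetical past episodes, invented purely for this illustration.
EPISODES = [
    {"place": "office", "person": "boss", "topic": "budget"},
    {"place": "office", "person": "boss", "topic": "budget"},
    {"place": "office", "person": "colleague", "topic": "schedule"},
    {"place": "cafe", "person": "friend", "topic": "holiday"},
]


def file_recall(store, key):
    """File-style storage: return the exact saved record, or None if absent."""
    return store.get(key)


def learn(episodes):
    """Toy 'learning': keep only how often each detail co-occurs with a place.
    The individual episodes themselves are not kept."""
    patterns = {}
    for ep in episodes:
        for field, value in ep.items():
            if field != "place":
                patterns.setdefault((ep["place"], field), Counter())[value] += 1
    return patterns


def reconstruct(patterns, place, fields=("person", "topic")):
    """Toy reconstructive recall: rebuild an episode from a cue (the place)
    by filling each detail with its most frequent learned value."""
    memory = {"place": place}
    for field in fields:
        counts = patterns.get((place, field))
        memory[field] = counts.most_common(1)[0][0] if counts else None
    return memory


if __name__ == "__main__":
    store = {"episode_3": EPISODES[2]}
    print(file_recall(store, "episode_3"))  # exact copy of what was saved
    print(file_recall(store, "episode_9"))  # None: nothing stored, nothing returned

    patterns = learn(EPISODES)
    # Trying to remember episode 3 (office, colleague, schedule) from its place alone:
    print(reconstruct(patterns, "office"))
    # prints {'place': 'office', 'person': 'boss', 'topic': 'budget'}
```

The reconstructed meeting is plausible and gets the gist right (a meeting at the office), but both details are wrong, because the typical office meeting overrides the specific one. In miniature, that is the same shape of error as Dean's testimony.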

Implications

- Confabulation is normal - not a bug unique to AI

- Verification matters - for both human and AI outputs

- Confidence calibration - the real problem is not confabulating as such, but not knowing when you're doing it
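One common way to quantify the calibration problem (a standard metric, not something the source itself prescribes) is expected calibration error (ECE): group answers by stated confidence and compare each group's average confidence with how often it was actually right. The Python sketch below assumes you already have, for each answer, a confidence in [0, 1] and a boolean marking whether it was correct; the function name and the default of 10 equal-width bins are illustrative assumptions.

```python
def expected_calibration_error(confidences, correct, n_bins=10):
    """Expected calibration error (ECE): the size-weighted average gap between
    stated confidence and actual accuracy across equal-width confidence bins.
    Hypothetical helper; equal-width binning is one choice among several."""
    if len(confidences) != len(correct):
        raise ValueError("confidences and correct must have the same length")
    n = len(confidences)
    if n == 0:
        return 0.0
    bins = [[] for _ in range(n_bins)]
    for conf, ok in zip(confidences, correct):
        if not 0.0 <= conf <= 1.0:
            raise ValueError("confidence values must lie in [0, 1]")
        # Map confidence to a bin index; conf == 1.0 goes into the last bin.
        idx = min(int(conf * n_bins), n_bins - 1)
        bins[idx].append((conf, ok))
    ece = 0.0
    for b in bins:
        if not b:
            continue
        avg_conf = sum(c for c, _ in b) / len(b)
        accuracy = sum(1 for _, ok in b if ok) / len(b)
        ece += (len(b) / n) * abs(avg_conf - accuracy)
    return ece


if __name__ == "__main__":
    outcomes = [True] * 6 + [False] * 4  # 60% of answers correct
    print(round(expected_calibration_error([0.9] * 10, outcomes), 3))  # overconfident: prints 0.3
    print(round(expected_calibration_error([0.6] * 10, outcomes), 3))  # calibrated: prints 0.0
```

A source that states 90% confidence while being right 60% of the time scores about 0.3; one whose 60% confidence matches its 60% accuracy scores about 0. Lower is better, though the exact value depends on the binning choice.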

Related Terms

- Hallucination - The common (but arguably misleading) term

- Grounding - Connecting outputs to verified sources