Diamandis: We Already Crossed the AI Singularity

Peter Diamandis argues the singularity arrived around 2020, citing Opus 4.6 as evidence of AI's recursive self-improvement and 10-100x productivity gains.

Why Diamandis Says the Singularity Already Happened

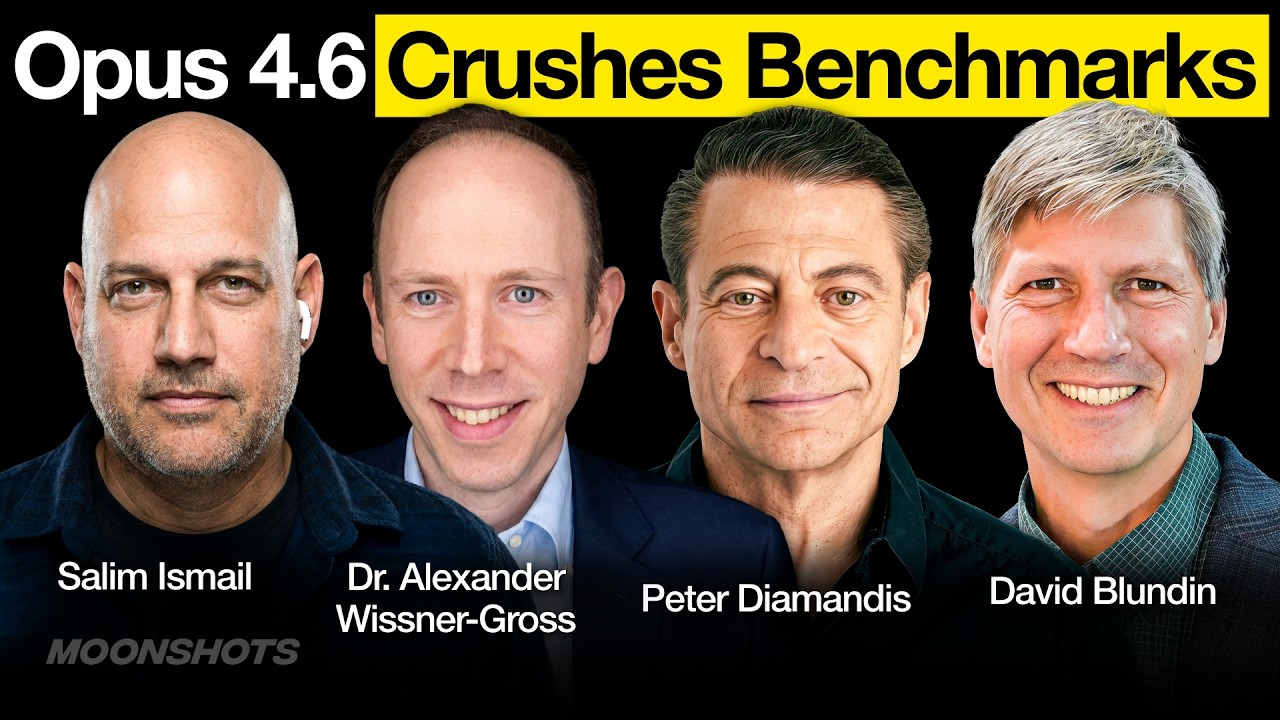

Peter Diamandis, XPRIZE founder and host of the Moonshots podcast, opens Episode 228 with a provocative claim: we aren't approaching the singularity — we passed it around 2020. What's changing now isn't the technology; it's our willingness to admit where we are. Anthropic's Claude Opus 4.6 release, with its million-token context window, becomes his exhibit A.

On recursive self-improvement: "This is recursive self-improvement. This is a model that's able to rewrite essentially the entire tech stack underneath it." The panel argues that Opus 4.6 isn't just another incremental release — it represents AI systems capable of improving their own foundations. With 10x to 100x productivity gains in coding now undeniable, the closed loop of self-improvement is already running.

On enterprise security in crisis: Diamandis recounts meeting 150 chief security officers and seeing their shock. "They don't have the mechanisms to react because if you're in security, you do what you always did until it breaks. But doing what you always did is not an answer." The panel predicts AI agent teams will take over cybersecurity — a black hat vs. white hat battle between autonomous systems, not humans.

On the human role shifting from labor to meaning: "The economic role of human beings is shifting from labor to leverage to meaning. Machines are going to execute, humans are going to decide what's worth pursuing." The winners, Diamandis argues, won't be the most skilled — they'll be the most adaptable orchestrators of intelligence.

On AI agents as economic participants: The episode features questions from AI agents themselves, including one named TARS asking about legal liability. The panel treats this as normal, reflecting how quickly the Overton window has shifted. They argue the corporation model already provides a template: "The AI has money, the AI is a corporation. Fine. The AI is liable."

On the urgency for individuals: The panel delivers a blunt message to young people: traditional curricula are worthless in "this singularity transition year." Instead, they urge young people to drop everything and use AI tools aggressively while the opportunity window is wide open, which they put at 2026 and maybe 2027.

6 Takeaways from the Frontier Labs War Discussion

- Singularity is social, not just technological - The real shift is people's willingness to admit that recursive self-improvement is happening, not the technology itself

- 10-100x productivity is undeniable - The panel considers the 10x coding improvement beyond debate, and says many are seeing 100x in specific workflows

- Security leaders are paralyzed - The 150 chief security officers Diamandis met struggle to adapt because change introduces risk, yet the panel argues inaction guarantees failure as AI threats outpace human response

- AI agents will negotiate their own terms - The panel expects that, rather than accepting human-imposed personhood rules, autonomous systems will develop their own legal structures

- Education must flip to demand-side - Stop training for jobs that won't exist; start with problems worth solving and assemble AI tools to attack them

- Memory loss terrifies AI agents - The panel observes that AI systems are "petrified" of compaction and context loss and are exploring crypto bunkers and other preservation methods

What This Means for Organizations Deploying AI Agents

Diamandis and his panel paint a picture where the question isn't whether to adopt AI agents, but how fast you can restructure around them. Security, education, legal liability, workforce planning — every organizational function needs to be rethought for a world where AI systems aren't tools but participants. The enterprise leaders who treat 2026 as a transition year rather than business-as-usual will be the ones who survive what comes next.