How Bloomberg Deploys AI Coding Tools to 9,000 Engineers

Bloomberg's infrastructure lead shares lessons on scaling AI coding tools to 9,000 engineers: paved paths, MCP servers, and why wins come from maintenance work.

Why AI Coding Hits Different at Enterprise Scale

This is what AI coding looks like at true enterprise scale - not a startup with 50 engineers but Bloomberg with 9,000 developers, one of the world's largest JavaScript codebases, and hundreds of millions of lines of production code. The lessons here are hard-won and pragmatic.

The productivity gains from AI coding drop fast beyond greenfield work. Bloomberg's surveys showed clear benefits for proofs of concept, test generation, and one-off scripts. But once engineers moved into the existing codebase, where system complexity is "at least polynomial" in lines of code, the measured gains dropped quickly. This is consistent with what many enterprises are finding: AI coding is great for new code, harder for evolving legacy systems.

The real wins come from work developers don't want to do. Bloomberg reframed their AI coding strategy around maintenance: security patches, migrations, dependency upgrades - the "menial work" nobody fights for. Their "uplift agents" scan the codebase, identify where patches apply, and generate PRs with explanations. This is where AI scales: not replacing developers but automating the tedious compliance work that nobody enjoys.
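
The scan, identify, and generate-PR loop described above can be sketched in a few lines. This is only an illustration, not Bloomberg's implementation: the `Patch` and `PullRequestDraft` types, the `find_uplifts` function, and the idea of repos as simple dictionaries of pinned versions are all assumptions made for the example. A real uplift agent would presumably use a model to write the change and its explanation, where this sketch uses a fixed rule.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Patch:
    """A hypothetical upgrade rule: bump `package` to `fixed_version`."""
    package: str
    fixed_version: str
    reason: str


@dataclass(frozen=True)
class PullRequestDraft:
    """A hypothetical PR proposal, including the explanation for reviewers."""
    repo: str
    title: str
    explanation: str


def parse_version(version: str) -> tuple[int, ...]:
    """Turn '1.2.3' into (1, 2, 3) so versions compare numerically."""
    return tuple(int(part) for part in version.split("."))


def find_uplifts(repos: dict[str, dict[str, str]], patch: Patch) -> list[PullRequestDraft]:
    """Scan each repo's pinned dependencies and draft a PR where the patch applies."""
    drafts = []
    for repo, deps in sorted(repos.items()):
        current = deps.get(patch.package)
        if current is None:
            continue  # this repo does not use the package
        if parse_version(current) >= parse_version(patch.fixed_version):
            continue  # already at or beyond the fixed version
        drafts.append(
            PullRequestDraft(
                repo=repo,
                title=f"Upgrade {patch.package} {current} -> {patch.fixed_version}",
                explanation=f"{repo} pins {patch.package}=={current}. {patch.reason}",
            )
        )
    return drafts
```

The useful property is that every draft carries its own explanation, so a reviewer can judge the change without reconstructing why it was proposed.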

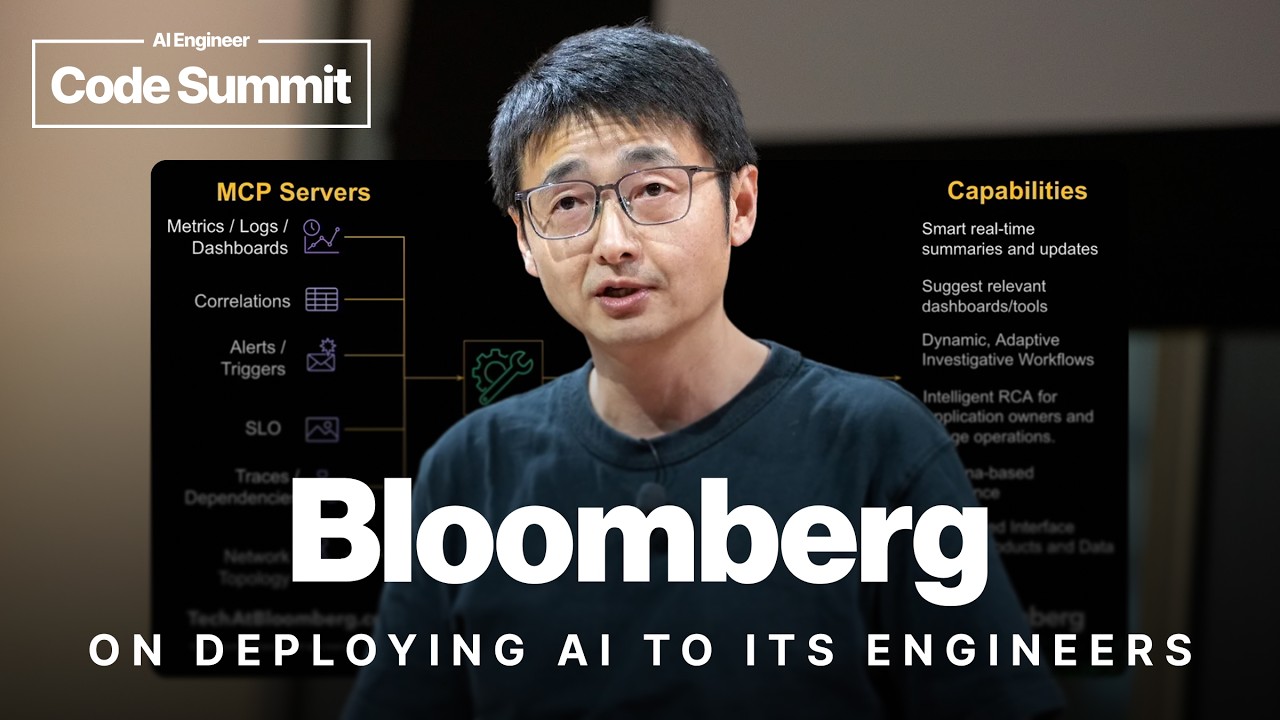

The paved path matters as much as the tools. When 9,000 engineers all want to build AI tooling, chaos follows. Bloomberg built a gateway for model experimentation, an MCP directory so teams discover existing servers before building duplicates, and a PaaS for deploying MCP servers with auth/security handled. The guiding principle: "Make the right thing extremely easy to do, make the wrong thing ridiculously hard to do."
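
One way to picture the MCP directory is as a registry that makes discovery the default step before registration. The sketch below is an assumption about how such a directory could behave, not a description of Bloomberg's system or of any MCP SDK: `ServerEntry`, `McpDirectory`, `OverlapError`, and the `allow_overlap` flag are hypothetical names invented for the example.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class ServerEntry:
    """One directory listing. All fields are hypothetical."""
    name: str
    owner: str
    capabilities: frozenset[str]


class OverlapError(Exception):
    """Raised when a new server duplicates capabilities already listed."""


@dataclass
class McpDirectory:
    entries: dict[str, ServerEntry] = field(default_factory=dict)

    def search(self, capability: str) -> list[ServerEntry]:
        """Return existing servers that already offer `capability`."""
        return [e for e in self.entries.values() if capability in e.capabilities]

    def register(self, entry: ServerEntry, allow_overlap: bool = False) -> None:
        """List a server, refusing silent duplicates of existing capabilities."""
        if entry.name in self.entries:
            raise ValueError(f"server name already taken: {entry.name}")
        overlapping = sorted(
            {e.name for cap in entry.capabilities for e in self.search(cap)}
        )
        if overlapping and not allow_overlap:
            raise OverlapError(
                f"{entry.name} overlaps with {', '.join(overlapping)}; "
                "reuse one of them or pass allow_overlap=True"
            )
        self.entries[entry.name] = entry
```

Registering a duplicate is still possible, but only by opting in explicitly, which is the "easy right thing, hard wrong thing" principle in miniature.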

Adoption isn't uniform across roles. ICs adopt faster than engineering managers. Bloomberg's response: leadership workshops to ensure managers can actually guide their teams on AI tooling. And new hires learn AI coding in onboarding, then become change agents when they join teams - "Why don't we do it this way?"

8 Insights From Bloomberg on AI Developer Tools

- AI coding gains drop beyond greenfield - Proofs of concept and tests are easy wins; legacy code is harder

- Target maintenance, not development - Security patches, migrations, and upgrades are where AI scales best

- New metric problem: time-to-merge increased - AI generates PRs faster, but code review becomes the bottleneck

- Build a paved path - Gateway for models, MCP directory for discovery, PaaS for deployment

- Make demos easy, production controlled - Let teams experiment freely but require quality gates for production

- Train new hires on AI-first workflows - They become change agents challenging "the way we've always done it"

- Leadership needs upskilling too - ICs adopt faster; managers need workshops to guide AI-enabled teams

- AI changes the cost function - Trade-off decisions shift; some work becomes cheaper, some more expensive
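
The time-to-merge point in the list above is easy to track once PR open and merge timestamps are available. Here is a minimal sketch, assuming each PR is represented as an `(opened, merged)` pair with `merged` set to `None` while the PR is still open; the function name `median_hours_to_merge` and the data shape are assumptions for illustration, not a metric definition from Bloomberg.

```python
from datetime import datetime
from statistics import median
from typing import Optional


def median_hours_to_merge(
    prs: list[tuple[datetime, Optional[datetime]]],
) -> Optional[float]:
    """Median hours from PR open to merge, ignoring PRs that are still open."""
    durations = [
        (merged - opened).total_seconds() / 3600
        for opened, merged in prs
        if merged is not None
    ]
    return median(durations) if durations else None
```

Comparing this number for the periods before and after an AI tooling rollout would show whether review has become the bottleneck. The median is used here because a few long-lived PRs can distort a mean.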

What This Means for Enterprise Engineering Teams

At enterprise scale (9,000 engineers, hundreds of millions of lines), AI coding wins come from maintenance work - security patches, migrations, upgrades - not greenfield development. The real insight: target the menial compliance work nobody wants to do. Code review becomes the new bottleneck.