Stanford: Your AI Coding ROI Might Be Negative

Two years of Stanford research reveals PR counts are misleading, code quality often drops with AI, and codebase hygiene predicts gains.

Why Stanford's AI Coding Research Matters for Engineering Leaders

This is the most rigorous research I've seen on AI coding tool ROI - two years of cross-sectional time-series data from Stanford, using a machine learning model trained on millions of expert code evaluations. The findings should make every engineering leader uncomfortable.

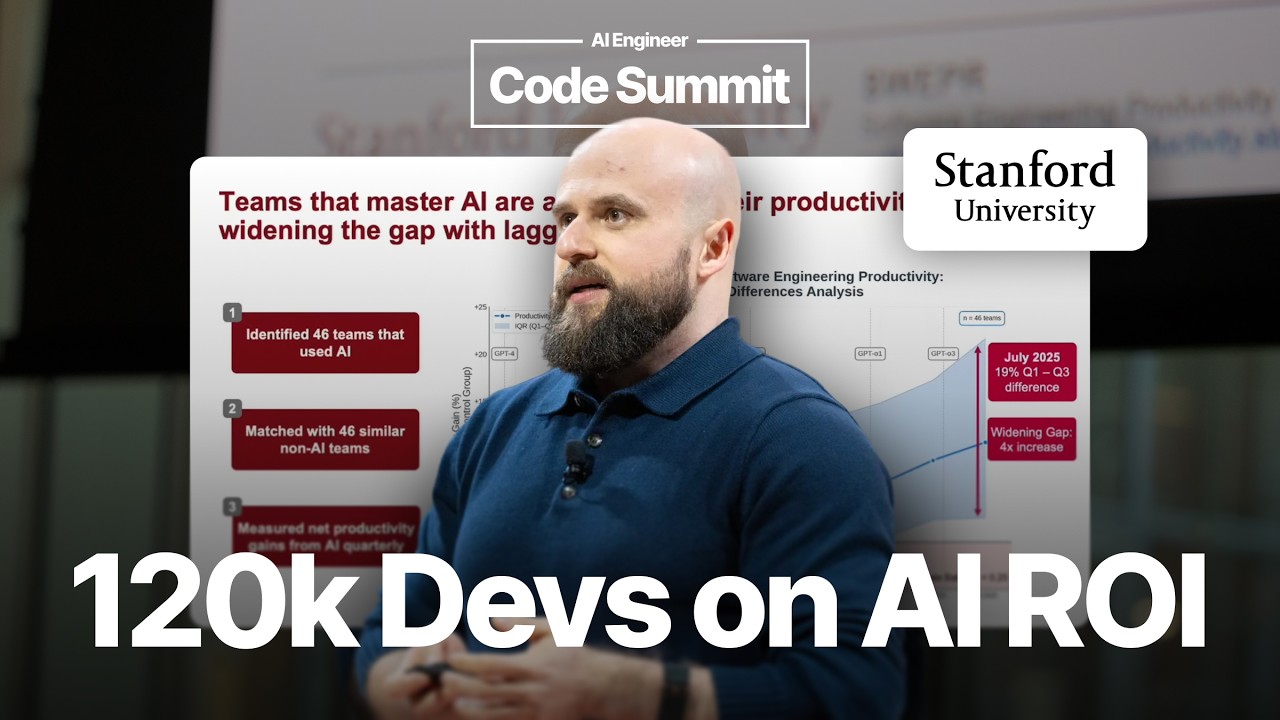

The gap between AI winners and losers is widening. Matching 46 AI-using teams against 46 similar non-AI teams, the median productivity gain is around 10%. But the variance is striking - and it's increasing over time. Top performers are compounding gains while strugglers fall further behind. If you're not measuring which cohort you're in, you're flying blind.

Token usage correlates loosely with gains - and there's a "death valley." Teams spending around 10 million tokens per engineer per month actually performed worse than teams using less. Quality of AI usage matters more than quantity. The real predictor? Codebase cleanliness. A composite score of tests, types, documentation, and modularity showed an R² of 0.40 against AI productivity gains, meaning it explains roughly 40% of the variance in those gains.
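
To make the R² figure concrete, here is a minimal sketch of how such a value is computed for a single predictor. The team scores and gain percentages are made-up placeholders, not data from the Stanford study, and the function name `r_squared` is only illustrative.

```python
from statistics import mean


def r_squared(xs, ys):
    """Coefficient of determination for a one-variable least-squares fit.

    For a single predictor this equals the squared Pearson correlation.
    """
    x_bar, y_bar = mean(xs), mean(ys)
    sxx = sum((x - x_bar) ** 2 for x in xs)
    syy = sum((y - y_bar) ** 2 for y in ys)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    if sxx == 0 or syy == 0:
        raise ValueError("need variation in both variables")
    return (sxy ** 2) / (sxx * syy)


# Hypothetical per-team values (NOT from the study): a 0-1 cleanliness
# score and the measured productivity change in percent.
cleanliness = [0.20, 0.35, 0.50, 0.60, 0.75, 0.90]
gain_pct = [-3.0, 12.0, 2.0, 18.0, 6.0, 22.0]

print(f"R^2 = {r_squared(cleanliness, gain_pct):.2f}")
```

An R² of 0.40 leaves most of the variance unexplained, so cleanliness reads as a strong signal rather than a guarantee.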

The case study is damning. A 350-person team adopted AI and saw PRs increase 14%. Leadership would have celebrated. But deeper measurement showed: code quality dropped 9%, rework increased 2.5x, and effective output didn't increase at all. The ROI might be negative - but without proper measurement, the company would have claimed millions in savings.
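
A rough back-of-the-envelope sketch shows how a PR increase can net out to nothing. The study's actual output metric comes from its ML model; the formula below (PR volume discounted by the share of work that is rework) and the 8% baseline rework share are assumptions chosen purely for illustration.

```python
def effective_output(pr_index, rework_share):
    """PR volume discounted by the fraction of work that is rework.

    Illustrative formula only; not the metric used in the study.
    """
    if not 0.0 <= rework_share <= 1.0:
        raise ValueError("rework_share must be between 0 and 1")
    return pr_index * (1.0 - rework_share)


# Hypothetical baseline: 8% of work was rework before AI adoption.
BASELINE_REWORK_SHARE = 0.08

# From the case study: PRs up 14%, rework up 2.5x.
before = effective_output(1.00, BASELINE_REWORK_SHARE)
after = effective_output(1.14, BASELINE_REWORK_SHARE * 2.5)

change_pct = (after / before - 1.0) * 100
print(f"effective output change: {change_pct:+.1f}%")
```

Under that assumed baseline the 14% PR gain is absorbed almost entirely by rework, before the 9% quality drop is even counted.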

AI without hygiene accelerates entropy. Clean codebases amplify AI gains; messy codebases create a death spiral: AI generates code faster, engineers lose trust when outputs need heavy rewriting, and adoption collapses. The framework matters: one primary metric (engineering output, not PRs or LoC) plus guardrails (rework, quality, tech debt, people metrics).
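
One way to encode the primary-metric-plus-guardrails idea is sketched below. The `Guardrail` class, the `assess` function, and the tolerance values are all hypothetical; every guardrail is expressed so that a higher number is worse.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Guardrail:
    name: str
    change_pct: float       # observed change vs. pre-AI baseline (higher = worse)
    max_allowed_pct: float  # hypothetical tolerance chosen by the team

    @property
    def breached(self):
        return self.change_pct > self.max_allowed_pct


def assess(primary_change_pct, guardrails):
    """Judge a rollout on the primary metric, but only if no guardrail is breached."""
    breached = [g.name for g in guardrails if g.breached]
    if breached:
        return "investigate: guardrails breached (" + ", ".join(breached) + ")"
    if primary_change_pct > 0:
        return "gain holds"
    return "no measurable gain"


# Case-study numbers: flat effective output, rework up 2.5x (+150%),
# quality down 9%. The tolerances (10% and 5%) are illustrative assumptions.
verdict = assess(
    0.0,
    [
        Guardrail("rework", 150.0, 10.0),
        Guardrail("quality decline", 9.0, 5.0),
    ],
)
print(verdict)
```

Checking guardrails first means a headline number such as PR count can never mark a rollout as a success on its own.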

8 Insights From Stanford on AI Coding Productivity

- Median AI productivity gain: ~10% - But variance is huge and widening between top and bottom performers

- Token usage doesn't predict gains - Quality of use matters more; there's a "death valley" around 10M tokens per engineer per month

- Codebase cleanliness is the key predictor - R² of 0.40 between code hygiene and AI productivity gains

- PR counts are misleading - One team showed 14% PR increase but 9% quality drop and 2.5x rework increase

- Access ≠ adoption ≠ effective use - Same tools, same licenses can produce wildly different results across business units

- AI accelerates entropy without discipline - Clean code amplifies gains; messy code creates trust-eroding death spirals

- Measure retroactively with git history - You don't need to set up experiments; analyze what already happened

- Guardrail metrics matter - A primary metric (output) plus guardrails (rework, quality, tech debt) guards against Goodhart's Law
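
As a starting point for measuring retroactively from git history, the sketch below sums lines added and deleted per month from `git log --numstat` output, so the months before and after an AI rollout can be compared. Lines changed is only a crude proxy and not the output metric used in the research; the function names and sample log are illustrative.

```python
import subprocess
from collections import defaultdict

# Each commit prints "@YYYY-MM" followed by "added<TAB>deleted<TAB>path" rows.
LOG_ARGS = ["git", "log", "--numstat", "--date=format:%Y-%m", "--pretty=format:@%ad"]


def monthly_churn(log_text):
    """Sum added and deleted lines per month from `git log --numstat` text."""
    totals = defaultdict(lambda: [0, 0])
    month = None
    for line in log_text.splitlines():
        if line.startswith("@"):
            month = line[1:].strip()
            continue
        parts = line.split("\t")
        if month is None or len(parts) != 3:
            continue
        added, deleted, _path = parts
        if added == "-" or deleted == "-":  # binary files report "-"
            continue
        totals[month][0] += int(added)
        totals[month][1] += int(deleted)
    return {m: tuple(v) for m, v in sorted(totals.items())}


def read_git_log(repo_path="."):
    """Run git in an existing repository and return the raw log text."""
    result = subprocess.run(
        LOG_ARGS, cwd=repo_path, capture_output=True, text=True, check=True
    )
    return result.stdout


# Made-up sample in the same shape as the real command's output.
sample = "@2024-01\n10\t2\tapp.py\n-\t-\tlogo.png\n\n@2024-02\n5\t7\tapp.py\n3\t0\ttest_app.py\n"
print(monthly_churn(sample))
```

On a real repository, `monthly_churn(read_git_log("path/to/repo"))` yields the same structure; deleted lines shortly after a burst of additions are one rough hint of rework worth a closer look.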

What This Means for Engineering Teams

Two years of Stanford research shows AI coding ROI might be negative for some teams: in one 350-person case study, PRs rose 14% while quality dropped 9% and rework increased 2.5x. The biggest predictor of AI gains isn't token usage but codebase cleanliness. Clean code amplifies AI; messy code creates a trust-eroding death spiral.