Long-Running AI Agents Are Here: How to Build Agents That Work for Hours

Something remarkable is happening.

AI agents are no longer limited to quick tasks. They're building entire applications. Processing thousands of documents. Running multi-day research projects.

Anthropic recently published research on agents that built a complete Claude.ai clone, with 200+ features, across multiple sessions.

This is the shift from "AI assistant" to "AI worker." And it's happening now.

The Breakthrough: Agents That Sustain Work

For years, AI agents were stuck in single-conversation mode. You could get impressive results in one session, but anything requiring sustained effort? Not possible.

That's changed.

The key insight from Anthropic's research: with the right infrastructure, agents can work reliably across hours, days, even weeks.

Not theoretically. Actually. They demonstrated it by having agents build a full-featured web application from scratch.

"The harness provides context management capabilities that enable agents to work without exhausting token limits."

This is a fundamentally new capability. Let's look at what makes it work.

What Makes Long-Running Agents Possible

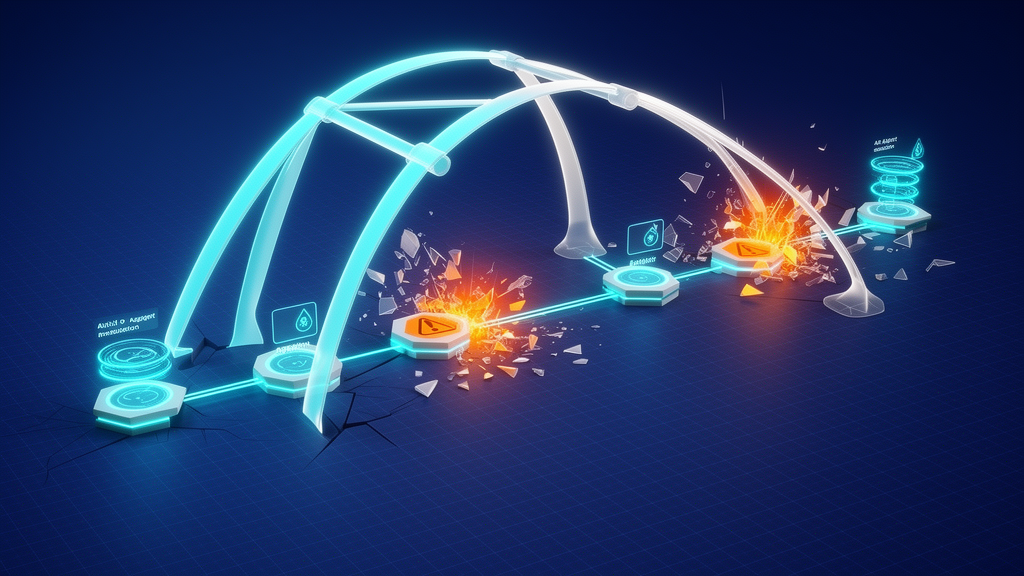

The Agent Harness Pattern

The breakthrough isn't a better model—it's better infrastructure around the model.

An agent harness is the scaffolding that enables sustained work:

| Component | What It Does |
|---|---|
| Context management | Summarizes older work to free tokens for new tasks |
| State persistence | Remembers decisions and progress across sessions |
| Environment setup | Each session starts from a clean, known state |
| Progress tracking | Structured files show what's done and what's next |

Think of it like shift handoff documentation for AI. Each "shift" (session) inherits everything from the previous one.
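To make the context-management row concrete, here is a minimal TypeScript sketch of compaction: when a transcript exceeds a token budget, older messages are folded into a single summary message. The `ChatMessage` type, the 4-characters-per-token estimate, and the `summarize` callback (which in a real harness would call the model) are all assumptions for illustration, not the API of any particular harness.

```typescript
// Hypothetical message shape; real harnesses define their own.
type ChatMessage = { role: "user" | "assistant" | "summary"; text: string };

// Rough assumption: about 4 characters per token. Real harnesses use the model's tokenizer.
const estimateTokens = (m: ChatMessage): number => Math.ceil(m.text.length / 4);

// If the transcript exceeds the budget, replace everything except the last
// `keepRecent` messages with one summary message. This is a single pass, so the
// result can still exceed the budget if the recent messages alone are too large.
function compact(
  transcript: ChatMessage[],
  budget: number,
  keepRecent: number,
  summarize: (older: ChatMessage[]) => string,
): ChatMessage[] {
  const total = transcript.reduce((sum, m) => sum + estimateTokens(m), 0);
  if (total <= budget || transcript.length <= keepRecent) return transcript;
  const older = transcript.slice(0, transcript.length - keepRecent);
  const recent = transcript.slice(transcript.length - keepRecent);
  return [{ role: "summary", text: summarize(older) }, ...recent];
}

// Usage: two large older messages get folded into one summary.
const transcript: ChatMessage[] = [
  { role: "user", text: "a".repeat(400) },
  { role: "assistant", text: "b".repeat(400) },
  { role: "user", text: "latest request" },
];
const compacted = compact(transcript, 150, 1, (older) => `Summary of ${older.length} earlier messages`);
console.log(compacted.map((m) => m.role).join(", ")); // summary, user
```

Keeping the most recent messages verbatim is a design choice: the agent loses detail about old work but never about the step it is currently on.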

How Sessions Connect

```text
Session 1: Initialize
├── Set up environment
├── Create progress tracking
├── Complete first milestone
└── Document state

Sessions 2 to N-1: Continue
├── Load previous state
├── Pick up where the last session left off
├── Complete next milestone
└── Document state

Final session (N): Complete
├── Finish remaining work
├── Verify everything works
└── Clean handoff
```

The magic: Each session is independent, but the harness creates continuity.
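The shift pattern above can be sketched as a loop in which every session receives only the persisted state, never the previous session's memory. In this minimal TypeScript sketch, the `ProjectState` shape, the in-memory `StateStore`, and the `runSession` callback are hypothetical stand-ins; a real harness would persist state to files or git and run the agent inside `runSession`.

```typescript
// Hypothetical state handed from one session to the next.
interface ProjectState {
  session: number;
  done: string[];
  remaining: string[];
  notes: string;
}

// Stand-in for durable storage (progress file, git, database).
class StateStore {
  private saved: string | null = null;
  save(state: ProjectState): void {
    this.saved = JSON.stringify(state); // serialize so no live objects leak between sessions
  }
  load(): ProjectState | null {
    return this.saved === null ? null : (JSON.parse(this.saved) as ProjectState);
  }
}

// Initialize once, then run sessions that each load state, work, and save state.
function runProject(
  store: StateStore,
  initialize: () => ProjectState,
  runSession: (state: ProjectState) => ProjectState,
  maxSessions: number,
): ProjectState {
  let state = store.load() ?? initialize();
  store.save(state);
  while (state.remaining.length > 0 && state.session < maxSessions) {
    const loaded = store.load()!; // load previous state
    state = runSession({ ...loaded, session: loaded.session + 1 });
    store.save(state); // document state for the next session
  }
  return state;
}

// Usage: a toy session that completes one milestone per run.
const store = new StateStore();
const result = runProject(
  store,
  () => ({ session: 1, done: ["environment setup"], remaining: ["auth", "dashboard", "settings"], notes: "" }),
  (s) => ({ ...s, done: [...s.done, s.remaining[0]], remaining: s.remaining.slice(1) }),
  10,
);
console.log(result.session, result.done.length); // 4 4
```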

Five Patterns That Unlock Long-Running Work

Anthropic's research identified what separates agents that sustain work from those that don't. Here are the patterns:

1. The Initializer Pattern

Start every project with structure.

The first session is special—it establishes the foundation:

```bash
#!/usr/bin/env bash
# init.sh - How to run this project
set -euo pipefail  # stop on the first failing step
npm install
npm run dev
```

```text
# progress.txt - Where we are
Project: Customer Portal
Status: Initialized
Completed: Environment setup
Next: Implement authentication
```

Why it works: Every subsequent session knows exactly how to pick up the work.
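On the reading side, the next session only needs to turn `progress.txt` back into structured state. A minimal TypeScript sketch, assuming the simple `Key: Value` format shown above; that format and the `parseProgress` helper are illustrative, not a standard.

```typescript
// Parse "Key: Value" lines from a progress file into a lookup table.
// Lines starting with "#" and lines without a key before a colon are ignored.
function parseProgress(text: string): Record<string, string> {
  const fields: Record<string, string> = {};
  for (const line of text.split("\n")) {
    const trimmed = line.trim();
    if (trimmed.startsWith("#")) continue;
    const colon = trimmed.indexOf(":");
    if (colon <= 0) continue;
    fields[trimmed.slice(0, colon).trim()] = trimmed.slice(colon + 1).trim();
  }
  return fields;
}

const progress = parseProgress(`# progress.txt - Where we are
Project: Customer Portal
Status: Initialized
Completed: Environment setup
Next: Implement authentication`);

console.log(progress["Next"]); // Implement authentication
```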

2. Structured Requirements (JSON > Prose)

Give agents a checklist, not a novel.

```json
{
  "features": [
    {"name": "User signup", "status": "complete", "verified": true},
    {"name": "Password reset", "status": "in_progress", "verified": false},
    {"name": "Session management", "status": "pending", "verified": false}
  ]
}
```

Why it works: Clear structure prevents scope creep and makes progress visible.
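Because the list is structured, the harness (or the agent itself) can pick the next task mechanically instead of re-reading prose. A TypeScript sketch, assuming the JSON shape above; the `nextFeature` rule (resume anything in progress first, then take the first pending item) is one reasonable policy, not necessarily the one Anthropic's harness uses. Note that `JSON.parse` does not validate the shape, so a real harness would check the file before trusting it.

```typescript
type Status = "pending" | "in_progress" | "complete";
interface Feature { name: string; status: Status; verified: boolean }
interface FeatureList { features: Feature[] }

// Resume unfinished work first; otherwise start the first pending feature.
function nextFeature(list: FeatureList): Feature | undefined {
  return (
    list.features.find((f) => f.status === "in_progress") ??
    list.features.find((f) => f.status === "pending")
  );
}

const list: FeatureList = JSON.parse(`{
  "features": [
    {"name": "User signup", "status": "complete", "verified": true},
    {"name": "Password reset", "status": "in_progress", "verified": false},
    {"name": "Session management", "status": "pending", "verified": false}
  ]
}`);

console.log(nextFeature(list)?.name); // Password reset
```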

3. Milestone-Based Progress

Break big projects into clear checkpoints.

Instead of "build the application," structure work as:

- ✅ Authentication flow

- ✅ Database schema

- 🔄 User dashboard

- ⏳ Settings page

- ⏳ Export features

Why it works: Each session has a clear, achievable goal. Progress compounds.
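The checklist above is easy to regenerate from structured data, which keeps the human-readable view and the machine-readable state in sync. A small TypeScript sketch; the `Milestone` type and the `done`/`active`/`todo` status names are hypothetical.

```typescript
type MilestoneStatus = "done" | "active" | "todo";
interface Milestone { name: string; status: MilestoneStatus }

const ICONS: Record<MilestoneStatus, string> = { done: "✅", active: "🔄", todo: "⏳" };

// Render the checklist plus a one-line summary the next session can read at a glance.
function renderMilestones(milestones: Milestone[]): string {
  const lines = milestones.map((m) => `- ${ICONS[m.status]} ${m.name}`);
  const done = milestones.filter((m) => m.status === "done").length;
  lines.push(`${done}/${milestones.length} milestones complete`);
  return lines.join("\n");
}

const report = renderMilestones([
  { name: "Authentication flow", status: "done" },
  { name: "Database schema", status: "done" },
  { name: "User dashboard", status: "active" },
  { name: "Settings page", status: "todo" },
  { name: "Export features", status: "todo" },
]);
console.log(report);
```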

4. End-to-End Verification

Trust, but verify—automatically.

The best results come from requiring actual verification:

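```typescript
import { test, expect } from '@playwright/test'

// Before marking a feature complete, run the real user flow in a browser
test('user can sign up', async ({ page }) => {
  await page.goto('/signup') // relative URL assumes baseURL is set in playwright.config
  await page.fill('[name=email]', 'test@example.com')
  await page.click('[type=submit]')
  // Verify the flow actually works ('Welcome' is a placeholder for your app's success text)
  await expect(page.getByText('Welcome')).toBeVisible()
})
```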

Why it works: Catches integration issues that unit tests miss. Anthropic saw 3.2x better bug detection with browser automation.
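The important design choice is that "complete" is set by the verification result, never by the agent's own claim. A minimal TypeScript sketch of that gate, reusing the feature-list shape from the JSON example; `verify` is a hypothetical callback standing in for an end-to-end run such as the browser flow above.

```typescript
type Status = "pending" | "in_progress" | "complete";
interface Feature { name: string; status: Status; verified: boolean }

// Only a passing end-to-end check may mark a feature complete.
// A failing or crashing check leaves the feature in progress for the next session.
async function verifyAndRecord(
  feature: Feature,
  verify: (name: string) => Promise<boolean>,
): Promise<Feature> {
  let passed = false;
  try {
    passed = await verify(feature.name);
  } catch {
    passed = false; // a crashed check counts as a failure, not a pass
  }
  const updated: Feature = passed
    ? { ...feature, status: "complete", verified: true }
    : { ...feature, status: "in_progress", verified: false };
  return updated;
}

// Usage: the verification result, not the agent, decides the status.
const draft: Feature = { name: "Password reset", status: "in_progress", verified: false };
verifyAndRecord(draft, async () => true).then((f) => console.log(f.status, f.verified)); // complete true
```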

5. Clean Handoffs

End each session ready for the next.

Session-end checklist:

- ✅ All tests passing

- ✅ Progress file updated

- ✅ No uncommitted changes

- ✅ Next steps documented

Why it works: Next session starts building, not debugging.
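The session-end checklist can be enforced by the harness instead of trusted to the agent. A TypeScript sketch, assuming a hypothetical `SessionEndReport` that the harness fills in (for example, `uncommittedFiles` could come from parsing `git status --porcelain`); the session is allowed to end only when the returned list is empty.

```typescript
// Hypothetical snapshot the harness gathers at the end of a session.
interface SessionEndReport {
  testsPassing: boolean;
  progressFileUpdated: boolean;
  uncommittedFiles: string[];
  nextStepsDocumented: boolean;
}

// Return the unmet checklist items; an empty array means the handoff is clean.
function handoffProblems(r: SessionEndReport): string[] {
  const problems: string[] = [];
  if (!r.testsPassing) problems.push("tests failing");
  if (!r.progressFileUpdated) problems.push("progress file not updated");
  if (r.uncommittedFiles.length > 0) problems.push(`uncommitted changes: ${r.uncommittedFiles.join(", ")}`);
  if (!r.nextStepsDocumented) problems.push("next steps missing");
  return problems;
}

const problems = handoffProblems({
  testsPassing: true,
  progressFileUpdated: true,
  uncommittedFiles: ["src/reset.ts"],
  nextStepsDocumented: true,
});
console.log(problems.join("; ")); // uncommitted changes: src/reset.ts
```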

The Results: What's Actually Possible

Anthropic's test case, building a Claude.ai clone:

| Metric | Result |
|---|---|
| Features built | 200+ |
| Sessions required | 8-12 |
| Features per session | 8-12 |
| End-to-end pass rate | 91% |

The key metric: pass^3 (success on all three of three attempts) reached 78%, a strong level of consistency.

This isn't a demo. It's a proof point that sustained AI work is achievable today.

Two Metrics That Matter

When evaluating long-running agent performance, focus on:

pass@k: "Can it succeed?"

Probability of success in at least one of k attempts. Measures capability.

pass^k: "Does it succeed consistently?"

Probability of success in ALL k attempts. Measures reliability.

The gap between these reveals opportunity. An agent with 80% pass@1 has only about 51% pass^3 if its attempts are independent (0.8 × 0.8 × 0.8 ≈ 0.51), so it has room to improve consistency, and that's where the harness pattern helps most.
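The 80%/51% example follows from treating attempts as independent with per-attempt success probability p: pass@k = 1 - (1 - p)^k and pass^k = p^k. A small TypeScript sketch of both; independence is an assumption, since real attempts on the same task are often correlated.

```typescript
// pass@k: at least one of k independent attempts succeeds.
const passAtK = (p: number, k: number): number => 1 - Math.pow(1 - p, k);

// pass^k: all k independent attempts succeed.
const passHatK = (p: number, k: number): number => Math.pow(p, k);

const p = 0.8;
console.log(passAtK(p, 3).toFixed(3)); // 0.992
console.log(passHatK(p, 3).toFixed(3)); // 0.512
```

With the same 80% per-attempt rate, pass@3 is above 99% while pass^3 is about 51%: capability looks solved long before reliability is.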

What This Unlocks for Organizations

Long-running agents open new possibilities:

Development Projects

- Build features across multiple sessions

- Refactor codebases systematically

- Pay down technical debt incrementally

Document Processing

- Analyze thousands of documents over days

- Extract and structure information at scale

- Maintain context across large corpora

Research & Analysis

- Multi-day research projects with synthesis

- Continuous monitoring and reporting

- Deep dives that would exhaust human attention

Operations

- Ongoing process automation

- Multi-step workflows with verification

- Tasks that span business hours

The shift: From "AI helps with tasks" to "AI completes projects."

Getting Started

If you want to build agents that sustain work:

1. Design for Sessions, Not Conversations

Think of each context window as a shift. What does the next shift need to know?

2. Invest in State Management

Progress files, git commits, structured requirements. This infrastructure is the enabler.

3. Automate Verification

Don't ask agents if they succeeded. Check automatically.

4. Start with Clear Milestones

Break work into achievable chunks. Let progress compound.

5. Measure Reliability (pass^k)

Capability is table stakes. Consistency is what matters for production.
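In practice, both metrics are estimated from repeated runs rather than from an assumed p. With n recorded attempts on a task and c successes, a common unbiased estimator for pass@k (introduced with the HumanEval benchmark) is 1 - C(n-c, k)/C(n, k), and pass^k can be estimated as C(c, k)/C(n, k), as used in τ-bench. A TypeScript sketch for a single task; suites typically average these per-task values, and the 10-run log below is hypothetical.

```typescript
// Binomial coefficient C(n, k) as a running product; each step stays an integer.
function choose(n: number, k: number): number {
  if (k < 0 || k > n) return 0;
  let result = 1;
  for (let i = 1; i <= k; i++) result = (result * (n - k + i)) / i;
  return result;
}

// n recorded attempts on one task, c of which succeeded; requires k <= n.
function estimatePassAtK(n: number, c: number, k: number): number {
  if (k > n) throw new Error("need at least k recorded attempts");
  return 1 - choose(n - c, k) / choose(n, k);
}

function estimatePassHatK(n: number, c: number, k: number): number {
  if (k > n) throw new Error("need at least k recorded attempts");
  return choose(c, k) / choose(n, k);
}

// Hypothetical log: 10 runs of one task, 8 successes.
console.log(estimatePassAtK(10, 8, 3).toFixed(3)); // 1.000
console.log(estimatePassHatK(10, 8, 3).toFixed(3)); // 0.467
```

The pass^3 estimate (56/120 ≈ 0.467) is lower than the 0.512 the independence formula gives for p = 0.8, because it draws three attempts without replacement from the ten that were actually recorded.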

The Opportunity Ahead

We're at an inflection point.

AI agents have moved from "impressive demos" to "sustained work." The infrastructure patterns are documented. The results are proven.

What's possible now:

- Agents that work on your codebase for hours

- Document processing that spans days

- Research projects that would exhaust human focus

- Operations that run continuously

The question isn't whether AI agents can do sustained work. They can.

The question is: what will you build with them?

Try It Yourself

TeamDay builds AI workflows that run reliably—with state management, verification, and the infrastructure that makes sustained work possible.

Build agents that complete projects, not just start them.

Sources:

- Demystifying Evals for AI Agents - Anthropic Engineering

- Effective Harnesses for Long-Running Agents - Anthropic Engineering